University certificate

Accreditation/Membership

The world's largest faculty of information technology

Introduction to the Program

A comprehensive, 100% online program, exclusive to TECH, with an international perspective supported by our membership in the Society for the Study of Artificial Intelligence and Simulation of Behaviour

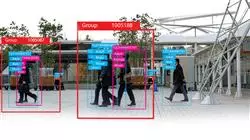

Computer Vision has become a key tool for developing advanced solutions in multiple areas of Computer Science. Its evolution has enabled digital systems to recognize, analyze, and interpret images with precision, optimizing processes in sectors such as industry, healthcare, agriculture, and surveillance. The growing need for intelligent automation and real-time visual analysis has driven demand for professionals capable of designing and implementing solutions based on this technology. In this context, academic programs that address the theoretical foundations and practical applications of this field in depth are essential.

In response, TECH Global University has launched an innovative Master's Degree in Computer Vision that stands out for its technical, rigorous, and up-to-date approach. The curriculum ranges from human visual perception and the historical evolution of the discipline to the acquisition and processing of images through optical systems. It also analyzes the physical and technical principles of image capture, lighting, and processing, giving professionals a solid basis for understanding how these technologies are applied in the industrial, medical, space, and commercial sectors. In this way, graduates will acquire advanced skills to design and implement automated, effective, and adaptable visual solutions in complex technological environments.

Furthermore, TECH offers a convenient, 100% online learning environment that allows students to access all content from anywhere, at any time. This flexibility is particularly useful for working professionals or those who need to balance their studies with other commitments. The program also employs TECH's disruptive Relearning methodology to ensure a natural and progressive assimilation of concepts without requiring long hours of study.

Thanks to TECH's membership in the Society for the Study of Artificial Intelligence and Simulation of Behaviour (AISB), students will have access to digital publications such as AISB and Discussions, as well as a weekly newsletter with news and job offers. They will also enjoy discounted rates for the AISB and ECAI conferences and receive travel support and training to create local groups.

You will create Computer Vision projects focused on innovation, efficiency, and process automation

This Master's Degree in Computer Vision contains the most complete and up-to-date university program on the market. Its most notable features are:

- The development of case studies presented by experts in Computer Vision

- Its graphic, schematic, and practical content, which provides scientific and practical information on the disciplines essential for professional practice

- Practical exercises where self-assessment can be used to improve learning

- Its special emphasis on innovative methodologies in Computer Vision

- Theoretical lessons, questions to the expert, debate forums on controversial topics, and individual reflection assignments

- Content that is accessible from any fixed or portable device with an internet connection

You will deepen your understanding of training algorithms for digital image processing and video analysis, using methods based on machine learning

The faculty includes professionals from the Computer Vision field, who share the experience of their work in this program, as well as recognized experts from leading societies and prestigious universities.

The multimedia content, developed with the latest educational technology, will provide the professional with situated and contextual learning, i.e., a simulated environment offering an immersive learning experience designed to prepare them for real-life situations.

This program is designed around Problem-Based Learning, whereby students must try to solve the various professional practice situations that arise throughout the program. To do so, they will be assisted by an innovative interactive video system created by renowned and experienced experts.

You will apply convolutional neural networks, generative models, and visual recognition systems to real-world institutional scenarios

The distinctive Relearning system powered by TECH will allow you to learn at your own pace, free from external teaching constraints

Syllabus

The syllabus will cover everything from the principles of human visual perception to the evolution and consolidation of Computer Vision as a technological discipline. It will also delve into optical systems, image capture and lighting processes, and the technical fundamentals of digital image processing. This foundation will enable professionals to gain a deep understanding of how these technologies work and how they are applied across multiple fields. As a result, graduates will be prepared to develop automated, precise, and effective visual solutions in highly technological environments.

You will master the use of specialized frameworks and programming environments such as TensorFlow

Module 1. Computer Vision

1.1. Human Perception

1.1.1. Human Visual System

1.1.2. Color

1.1.3. Visible and Non-Visible Frequencies

1.2. Chronicle of Computer Vision

1.2.1. Principles

1.2.2. Evolution

1.2.3. The Importance of Computer Vision

1.3. Digital Image Composition

1.3.1. The Digital Image

1.3.2. Types of Images

1.3.3. Color Spaces

1.3.4. RGB

1.3.5. HSV and HSL

1.3.6. CMY-CMYK

1.3.7. YCbCr

1.3.8. Indexed Image

1.4. Image Acquisition Systems

1.4.1. Operation of a Digital Camera

1.4.2. The Correct Exposure for Each Situation

1.4.3. Depth of Field

1.4.4. Resolution

1.4.5. Image Formats

1.4.6. HDR Mode

1.4.7. High Resolution Cameras

1.4.8. High-Speed Cameras

1.5. Optical Systems

1.5.1. Optical Principles

1.5.2. Conventional Lenses

1.5.3. Telecentric Lenses

1.5.4. Types of Autofocus Lenses

1.5.5. Focal Length

1.5.6. Depth of Field

1.5.7. Optical Distortion

1.5.8. Calibration of an Image

1.6. Illumination Systems

1.6.1. Importance of Illumination

1.6.2. Frequency Response

1.6.3. LED Illumination

1.6.4. Outdoor Lighting

1.6.5. Types of Lighting for Industrial Applications. Effects

1.7. 3D Capture Systems

1.7.1. Stereo Vision

1.7.2. Triangulation

1.7.3. Structured Light

1.7.4. Time of Flight

1.7.5. Lidar

1.8. Multispectrum

1.8.1. Multispectral Cameras

1.8.2. Hyperspectral Cameras

1.9. Near Non-Visible Spectrum

1.9.1. IR Cameras

1.9.2. UV Cameras

1.9.3. Converting From Non-Visible to Visible by Illumination

1.10. Other Spectral Bands

1.10.1. X-Ray

1.10.2. Terahertz

Module 2. Applications and State of the Art

2.1. Industrial Applications

2.1.1. Machine Vision Libraries

2.1.2. Compact Cameras

2.1.3. PC-Based Systems

2.1.4. Industrial Robotics

2.1.5. 2D Pick and Place

2.1.6. Bin Picking

2.1.7. Quality Control

2.1.8. Presence/Absence of Components

2.1.9. Dimensional Control

2.1.10. Labeling Control

2.1.11. Traceability

2.2. Autonomous Vehicles

2.2.1. Driver Assistance

2.2.2. Autonomous Driving

2.3. Computer Vision for Content Analysis

2.3.1. Filtering by Content

2.3.2. Visual Content Moderation

2.3.3. Tracking Systems

2.3.4. Brand and Logo Identification

2.3.5. Video Labeling and Classification

2.3.6. Scene Change Detection

2.3.7. Text or Credits Extraction

2.4. Medical Applications

2.4.1. Disease Detection and Localization

2.4.2. Cancer and X-Ray Analysis

2.4.3. Advances in Computer Vision Driven by COVID-19

2.4.4. Assistance in the Operating Room

2.5. Space Applications

2.5.1. Satellite Image Analysis

2.5.2. Computer Vision for the Study of Space

2.5.3. Mission to Mars

2.6. Commercial Applications

2.6.1. Stock Control

2.6.2. Video Surveillance, Home Security

2.6.3. Parking Cameras

2.6.4. Population Control Cameras

2.6.5. Speed Cameras

2.7. Vision Applied to Robotics

2.7.1. Drones

2.7.2. AGV

2.7.3. Vision in Collaborative Robots

2.7.4. The Eyes of the Robots

2.8. Augmented Reality

2.8.1. Operation

2.8.2. Devices

2.8.3. Industrial Applications

2.8.4. Commercial Applications

2.9. Cloud Computing

2.9.1. Cloud Computing Platforms

2.9.2. From Cloud Computing to Production

2.10. Research and State of the Art

2.10.1. Commercial Applications

2.10.2. What’s Cooking

2.10.3. The Future of Computer Vision

Module 3. Digital Image Processing

3.1. Computer Vision Development Environment

3.1.1. Computer Vision Libraries

3.1.2. Programming Environment

3.1.3. Visualization Tools

3.2. Digital Image Processing

3.2.1. Pixel Relationships

3.2.2. Image Operations

3.2.3. Geometric Transformations

3.3. Pixel Operations

3.3.1. Histogram

3.3.2. Histogram Transformations

3.3.3. Operations on Color Images

3.4. Logical and Arithmetic Operations

3.4.1. Addition and Subtraction

3.4.2. Product and Division

3.4.3. AND/NAND

3.4.4. OR/NOR

3.4.5. XOR/XNOR

3.5. Filters

3.5.1. Masks and Convolution

3.5.2. Linear Filtering

3.5.3. Non-Linear Filtering

3.5.4. Fourier Analysis

3.6. Morphological Operations

3.6.1. Erosion and Dilation

3.6.2. Closing and Opening

3.6.3. Top-Hat and Black-Hat

3.6.4. Contour Detection

3.6.5. Skeleton

3.6.6. Hole Filling

3.6.7. Convex Hull

3.7. Image Analysis Tools

3.7.1. Edge Detection

3.7.2. Detection of Blobs

3.7.3. Dimensional Control

3.7.4. Color Inspection

3.8. Object Segmentation

3.8.1. Image Segmentation

3.8.2. Classical Segmentation Techniques

3.8.3. Real Applications

3.9. Image Calibration

3.9.1. Image Calibration

3.9.2. Methods of Calibration

3.9.3. Calibration Process in a 2D Camera/Robot System

3.10. Image Processing in a Real Environment

3.10.1. Problem Analysis

3.10.2. Image Processing

3.10.3. Feature Extraction

3.10.4. Final Results

Module 4. Advanced Digital Image Processing

4.1. Optical Character Recognition (OCR)

4.1.1. Image Pre-Processing

4.1.2. Text Detection

4.1.3. Text Recognition

4.2. Code Reading

4.2.1. 1D Codes

4.2.2. 2D Codes

4.2.3. Applications

4.3. Pattern Search

4.3.1. Pattern Search

4.3.2. Patterns Based on Gray Level

4.3.3. Patterns Based on Contours

4.3.4. Patterns Based on Geometric Shapes

4.3.5. Other Techniques

4.4. Object Tracking with Conventional Vision

4.4.1. Background Extraction

4.4.2. Mean Shift

4.4.3. CAMShift

4.4.4. Optical Flow

4.5. Facial Recognition

4.5.1. Facial Landmark Detection

4.5.2. Applications

4.5.3. Facial Recognition

4.5.4. Emotion Recognition

4.6. Panoramas and Alignment

4.6.1. Stitching

4.6.2. Image Composition

4.6.3. Photomontage

4.7. High Dynamic Range (HDR) and Photometric Stereo

4.7.1. Increasing the Dynamic Range

4.7.2. Image Compositing for Contour Enhancement

4.7.3. Techniques for the Use of Dynamic Applications

4.8. Image Compression

4.8.1. Image Compression

4.8.2. Types of Compressors

4.8.3. Image Compression Techniques

4.9. Video Processing

4.9.1. Image Sequences

4.9.2. Video Formats and Codecs

4.9.3. Reading a Video

4.9.4. Frame Processing

4.10. Real Application of Image Processing

4.10.1. Problem Analysis

4.10.2. Image Processing

4.10.3. Feature Extraction

4.10.4. Final Results

Module 5. 3D Image Processing

5.1. 3D Imaging

5.1.1. 3D Imaging

5.1.2. 3D Image Processing Software and Visualizations

5.1.3. Metrology Software

5.2. Open3D

5.2.1. Library for 3D Data Processing

5.2.2. Characteristics

5.2.3. Installation and Use

5.3. The Data

5.3.1. Depth Maps in 2D Images

5.3.2. Point Clouds

5.3.3. Normals

5.3.4. Surfaces

5.4. Visualization

5.4.1. Data Visualization

5.4.2. Controls

5.4.3. Web Display

5.5. Filters

5.5.1. Distance Between Points and Outlier Removal

5.5.2. High Pass Filter

5.5.3. Downsampling

5.6. Geometry and Feature Extraction

5.6.1. Extraction of a Profile

5.6.2. Depth Measurement

5.6.3. Volume

5.6.4. 3D Geometric Shapes

5.6.5. Shots

5.6.6. Projection of a Point

5.6.7. Geometric Distances

5.6.8. k-d Tree

5.6.9. 3D Features

5.7. Registration and Meshing

5.7.1. Concatenation

5.7.2. ICP

5.7.3. 3D RANSAC

5.8. 3D Object Recognition

5.8.1. Searching for an Object in the 3D Scene

5.8.2. Segmentation

5.8.3. Bin Picking

5.9. Surface Analysis

5.9.1. Smoothing

5.9.2. Orientable Surfaces

5.9.3. Octree

5.10. Triangulation

5.10.1. From Mesh to Point Cloud

5.10.2. Depth Map Triangulation

5.10.3. Triangulation of Unordered Point Clouds

Module 6. Deep Learning

6.1. Artificial Intelligence

6.1.1. Machine Learning

6.1.2. Deep Learning

6.1.3. The Explosion of Deep Learning: Why Now?

6.2. Neural Networks

6.2.1. The Neural Network

6.2.2. Uses of Neural Networks

6.2.3. Linear Regression and the Perceptron

6.2.4. Forward Propagation

6.2.5. Backpropagation

6.2.6. Feature Vectors

6.3. Loss Functions

6.3.1. Loss Functions

6.3.2. Types of Loss Functions

6.3.3. Choice of Loss Functions

6.4. Activation Functions

6.4.1. Activation Function

6.4.2. Linear Functions

6.4.3. Non-Linear Functions

6.4.4. Output vs. Hidden Layer Activation Functions

6.5. Regularization and Normalization

6.5.1. Regularization and Normalization

6.5.2. Overfitting and Data Augmentation

6.5.3. Regularization Methods: L1, L2 and Dropout

6.5.4. Normalization Methods: Batch, Weight, Layer

6.6. Optimization

6.6.1. Gradient Descent

6.6.2. Stochastic Gradient Descent

6.6.3. Mini-Batch Gradient Descent

6.6.4. Momentum

6.6.5. Adam

6.7. Hyperparameter Tuning and Weights

6.7.1. Hyperparameters

6.7.2. Batch Size vs. Learning Rate vs. Step Decay

6.7.3. Weights

6.8. Evaluation Metrics of a Neural Network

6.8.1. Accuracy

6.8.2. Dice Coefficient

6.8.3. Sensitivity vs. Specificity / Recall vs. Precision

6.8.4. ROC Curve (AUC)

6.8.5. F1-Score

6.8.6. Confusion Matrix

6.8.7. Cross-Validation

6.9. Frameworks and Hardware

6.9.1. TensorFlow

6.9.2. PyTorch

6.9.3. Caffe

6.9.4. Keras

6.9.5. Hardware for the Training Phase

6.10. Creation of a Neural Network – Training and Validation

6.10.1. Dataset

6.10.2. Network Construction

6.10.3. Training

6.10.4. Visualization of Results

Module 7. Convolutional Neural Networks and Image Classification

7.1. Convolutional Neural Networks

7.1.1. Introduction

7.1.2. Convolution

7.1.3. CNN Building Blocks

7.2. Types of CNN Layers

7.2.1. Convolutional

7.2.2. Activation

7.2.3. Batch Normalization

7.2.4. Pooling

7.2.5. Fully Connected

7.3. Metrics

7.3.1. Confusion Matrix

7.3.2. Accuracy

7.3.3. Precision

7.3.4. Recall

7.3.5. F1 Score

7.3.6. ROC Curve

7.3.7. AUC

7.4. Main Architectures

7.4.1. AlexNet

7.4.2. VGG

7.4.3. ResNet

7.4.4. GoogLeNet

7.5. Image Classification

7.5.1. Introduction

7.5.2. Data Analysis

7.5.3. Data Preparation

7.5.4. Model Training

7.5.5. Model Validation

7.6. Practical Considerations for CNN Training

7.6.1. Optimizer Selection

7.6.2. Learning Rate Scheduler

7.6.3. Checking the Training Pipeline

7.6.4. Training with Regularization

7.7. Best Practices in Deep Learning

7.7.1. Transfer Learning

7.7.2. Fine Tuning

7.7.3. Data Augmentation

7.8. Statistical Data Evaluation

7.8.1. Number of Datasets

7.8.2. Number of Labels

7.8.3. Number of Images

7.8.4. Data Balancing

7.9. Deployment

7.9.1. Saving and Loading Models

7.9.2. ONNX

7.9.3. Inference

7.10. Practical Case: Image Classification

7.10.1. Data Analysis and Preparation

7.10.2. Testing the Training Pipeline

7.10.3. Model Training

7.10.4. Model Validation

Module 8. Object Detection

8.1. Object Detection and Tracking

8.1.1. Object Detection

8.1.2. Use Cases

8.1.3. Object Tracking

8.1.4. Use Cases

8.1.5. Occlusions, Rigid and Non-Rigid Poses

8.2. Assessment Metrics

8.2.1. Intersection over Union (IoU)

8.2.2. Confidence Score

8.2.3. Recall

8.2.4. Precision

8.2.5. Recall-Precision Curve

8.2.6. Mean Average Precision (mAP)

8.3. Traditional Methods

8.3.1. Sliding Window

8.3.2. Viola-Jones Detector

8.3.3. HOG

8.3.4. Non-Maximum Suppression (NMS)

8.4. Datasets

8.4.1. PASCAL VOC

8.4.2. MS COCO

8.4.3. ImageNet (2014)

8.4.4. MOT Challenge

8.5. Two-Stage Object Detectors

8.5.1. R-CNN

8.5.2. Fast R-CNN

8.5.3. Faster R-CNN

8.5.4. Mask R-CNN

8.6. Single-Shot Object Detectors

8.6.1. SSD

8.6.2. YOLO

8.6.3. RetinaNet

8.6.4. CenterNet

8.6.5. EfficientDet

8.7. Backbones

8.7.1. VGG

8.7.2. ResNet

8.7.3. MobileNet

8.7.4. ShuffleNet

8.7.5. Darknet

8.8. Object Tracking

8.8.1. Classical Approaches

8.8.2. Particle Filters

8.8.3. Kalman Filter

8.8.4. SORT Tracker

8.8.5. DeepSORT

8.9. Deployment

8.9.1. Computing Platform

8.9.2. Choice of Backbone

8.9.3. Choice of Framework

8.9.4. Model Optimization

8.9.5. Model Versioning

8.10. Study: People Detection and Tracking

8.10.1. People Detection

8.10.2. People Tracking

8.10.3. Re-Identification

8.10.4. Counting People in Crowds

Module 9. Image Segmentation with Deep Learning

9.1. Object Detection and Segmentation

9.1.1. Semantic Segmentation

9.1.1.1. Semantic Segmentation Use Cases

9.1.2. Instance Segmentation

9.1.2.1. Instance Segmentation Use Cases

9.2. Evaluation Metrics

9.2.1. Similarities with Other Methods

9.2.2. Pixel Accuracy

9.2.3. Dice Coefficient (F1 Score)

9.3. Cost Functions

9.3.1. Dice Loss

9.3.2. Focal Loss

9.3.3. Tversky Loss

9.3.4. Other Functions

9.4. Traditional Segmentation Methods

9.4.1. Thresholding with Otsu and Ridler

9.4.2. Self-Organizing Maps

9.4.3. GMM-EM Algorithm

9.5. Semantic Segmentation Applying Deep Learning: FCN

9.5.1. FCN

9.5.2. Architecture

9.5.3. FCN Applications

9.6. Semantic Segmentation Applying Deep Learning: U-NET

9.6.1. U-NET

9.6.2. Architecture

9.6.3. U-NET Application

9.7. Semantic Segmentation Applying Deep Learning: DeepLab

9.7.1. DeepLab

9.7.2. Architecture

9.7.3. DeepLab Application

9.8. Instance Segmentation Applying Deep Learning: Mask R-CNN

9.8.1. Mask R-CNN

9.8.2. Architecture

9.8.3. Application of Mask R-CNN

9.9. Video Segmentation

9.9.1. STFCN

9.9.2. Semantic Video CNNs

9.9.3. Clockwork Convnets

9.9.4. Low-Latency

9.10. Point Cloud Segmentation

9.10.1. The Point Cloud

9.10.2. PointNet

9.10.3. A-CNN

Module 10. Advanced Image Segmentation and Advanced Computer Vision Techniques

10.1. Datasets for General Segmentation Problems

10.1.1. Pascal Context

10.1.2. CelebAMask-HQ

10.1.3. Cityscapes Dataset

10.1.4. CCP Dataset

10.2. Semantic Segmentation in Medicine

10.2.1. Semantic Segmentation in Medicine

10.2.2. Datasets for Medical Problems

10.2.3. Practical Application

10.3. Annotation Tools

10.3.1. Computer Vision Annotation Tool

10.3.2. LabelMe

10.3.3. Other Tools

10.4. Segmentation Tools Using Different Frameworks

10.4.1. Keras

10.4.2. TensorFlow 2

10.4.3. PyTorch

10.4.4. Other

10.5. Semantic Segmentation Project. The Data, Phase 1

10.5.1. Problem Analysis

10.5.2. Input Source for Data

10.5.3. Data Analysis

10.5.4. Data Preparation

10.6. Semantic Segmentation Project. Training, Phase 2

10.6.1. Algorithm Selection

10.6.2. Training

10.6.3. Evaluation

10.7. Semantic Segmentation Project. Results, Phase 3

10.7.1. Fine Tuning

10.7.2. Presentation of the Solution

10.7.3. Conclusions

10.8. Autoencoders

10.8.1. Autoencoders

10.8.2. Autoencoder Architecture

10.8.3. Denoising Autoencoders

10.8.4. Automatic Colorization Autoencoders

10.9. Generative Adversarial Networks (GANs)

10.9.1. Generative Adversarial Networks (GANs)

10.9.2. DCGAN Architecture

10.9.3. Conditional GAN Architecture

10.10. Enhanced Generative Adversarial Networks

10.10.1. Overview of the Problem

10.10.2. WGAN

10.10.3. LSGAN

10.10.4. ACGAN

Master's Degree in Computer Vision

Computer Vision has become a fundamental tool for the development of various industries, such as manufacturing, automotive, and security. In this context, it is essential that professionals are trained to develop and implement systems that can process, analyze, and make decisions based on visual information. The Master's Degree in Computer Vision from TECH is an excellent opportunity to acquire knowledge and skills in this field. This program, developed by a team of experts in the subject, offers comprehensive and up-to-date training in the use of Computer Vision technologies, focusing on topics such as object detection, facial recognition, pattern identification, and the automation of industrial processes.

Stay at the forefront of the latest advances in Computer Vision

The Master's Degree in Computer Vision uses a 100% online methodology, allowing you to study without interrupting your work or personal life. Additionally, the program provides numerous multimedia learning resources, such as practical exercises, technical videos, and masterclasses. Upon completing the program, you will be prepared to apply your knowledge in the industry and work in areas related to the development of advanced technological solutions and process automation.